Building a CNN that recognizes furniture from different Louis periods

Training and deploying a model using fastai, IPython widgets and Voilà

- Context

- Content

- Building the dataset

- From Data to DataLoaders

- Training the actual model !

- Making a web app

- Conclusion

Context

I did this project after reading chapter 2 of the fastai book. It aims to classify furniture from four different eras:

- Louis XIII

- Louis XIV

- Louis XV

- Louis XVI

The idea came from a friend of mine, who told me about an endless debate at lunch over which era a particular piece of furniture came from.

Content

This notebook walks you through how I built and deployed the model using the fastai library. If you don't care about this and just want to check out the final result, please head here.

Building the dataset

To build the dataset, I used the Fatkun Batch Download Image Chrome plugin. There are many other ways to scrape the web for images, but in my case typing Louis XIV furniture (or rather mobilier Louis XIV, since this is a French thing) into Google Images returned a bunch of furniture from all eras. I found that Pinterest has some pretty good collections of images (for example here for Louis XIV styled furniture).

This plugin makes it easy to open a page and download every image on it, including trash (which you can remove before downloading). I recommend visiting every website or page you need for your dataset before downloading, since the plugin detects duplicates (which also avoids picking up the same trash images time and time again).

I put each type of image in its own folder, as you can see.
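Before handing the folders to fastai, a quick sanity check can confirm how many images ended up under each label. The sketch below is plain Python and not part of fastai: the helper name `count_images_per_class` and the extension set are my own assumptions, and it only relies on the one-folder-per-label layout described above.

```python
from collections import Counter
from pathlib import Path

# Assumed set of image extensions; adjust to match your own files.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".gif", ".bmp", ".webp"}

def count_images_per_class(root):
    """Count image files per label, where the label is the parent folder name."""
    counts = Counter()
    for f in Path(root).rglob("*"):
        if f.is_file() and f.suffix.lower() in IMAGE_EXTS:
            counts[f.parent.name] += 1
    return counts

# Usage: print one line per label if the dataset folder exists.
root = Path("data/train")
if root.exists():
    for label, n in sorted(count_images_per_class(root).items()):
        print(f"{label}: {n}")
```

A strongly unbalanced count is a hint that one era needs more scraping before training.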

from fastai.vision.all import *  # provides Path, get_image_files, verify_images, DataBlock, ...

path = Path('data/train')

path.ls()

fns = get_image_files(path) # gets all the image files recursively in path

failed = verify_images(fns)

failed

As you can see, our dataset doesn't contain any corrupt images, and I am not sure whether they can even occur when using this plugin. If you ever do have some, run the next step to delete them.

failed.map(Path.unlink)

From Data to DataLoaders

You should really go and read the chapter from the book if you want to understand what I am doing. I am basically copy-pasting their stuff; even this title is the same!

furniture = DataBlock(

    blocks=(ImageBlock, CategoryBlock),

    get_items=get_image_files,

    splitter=RandomSplitter(valid_pct=0.2, seed=1), # Fixed seed for tuning hyperparameters

    get_y=parent_label,

    item_tfms=RandomResizedCrop(224, min_scale=0.5),

    batch_tfms=aug_transforms())

dls = furniture.dataloaders(path)
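The fixed seed in the splitter is what keeps the validation set identical from one run to the next, so that a change in error rate comes from the hyperparameters and not from a different split. Here is a minimal sketch of that idea in plain Python. It is not fastai's implementation: `seeded_split` is a hypothetical helper that only mimics the behaviour of a seeded random split.

```python
import random

def seeded_split(n_items, valid_pct=0.2, seed=None):
    """Shuffle indices 0..n_items-1 and hold out a valid_pct share for validation."""
    idxs = list(range(n_items))
    random.Random(seed).shuffle(idxs)  # seeded generator, so the shuffle is repeatable
    cut = int(valid_pct * n_items)
    return idxs[cut:], idxs[:cut]  # (train indices, validation indices)

train_a, valid_a = seeded_split(100, valid_pct=0.2, seed=1)
train_b, valid_b = seeded_split(100, valid_pct=0.2, seed=1)
print(valid_a == valid_b)  # same seed, same validation set
print(len(train_a), len(valid_a))
```

Note that the split is only stable as long as the list of files stays the same; deleting or moving images changes which files the indices point to.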

Let's take a look at some of those images!

dls.valid.show_batch(max_n=4, nrows=1)

Okay, looking good! But we have lots of data.

len(fns)

Among this data there are probably some mislabeled images, and some that aren't even furniture! To help us out in our cleaning process, we will use a quickly trained model.

learn = cnn_learner(dls, resnet18, metrics=error_rate)

learn.fine_tune(4)

Even though we only trained our model briefly on a small architecture (resnet18), we can get an idea of what the model has trouble identifying by plotting a confusion matrix.

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()
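For readers who want to see what the confusion matrix is counting, here is a small plain-Python sketch. It is only an illustration with made-up labels, not the way fastai computes it, and both helper names are my own.

```python
from collections import Counter

def confusion_counts(actual, predicted):
    """Count (actual, predicted) label pairs; pairs with differing labels are mistakes."""
    return Counter(zip(actual, predicted))

def most_confused_pairs(actual, predicted, min_count=1):
    """List misclassified (actual, predicted, count) triples, most frequent first."""
    pairs = confusion_counts(actual, predicted)
    errors = [(a, p, n) for (a, p), n in pairs.items() if a != p and n >= min_count]
    return sorted(errors, key=lambda t: -t[2])

# Made-up labels, purely for illustration.
actual    = ["Louis XIV", "Louis XIV", "Louis XV", "Louis XV", "Louis XVI"]
predicted = ["Louis XIV", "Louis XV",  "Louis XV", "Louis XIV", "Louis XVI"]
print(most_confused_pairs(actual, predicted))
```

Each cell of the plotted matrix corresponds to one of these pair counts, with the correct predictions on the diagonal.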

But we haven't cleaned anything yet, so let's do that!

from fastai.vision.widgets import *  # provides ImageClassifierCleaner and the ipywidgets names

cleaner = ImageClassifierCleaner(learn)

cleaner

for idx in cleaner.delete(): cleaner.fns[idx].unlink() # Delete the files marked as Delete in the widget

for idx,cat in cleaner.change(): shutil.move(str(cleaner.fns[idx]), path/cat) # Move relabeled images into the folder of their new class
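One caveat when moving files between label folders: as far as I know, `shutil.move` raises an error when the destination folder already holds a file with the same name, which can happen with scraped images. Here is a minimal sketch of a workaround. `move_to_label` is a hypothetical helper of mine, not part of fastai, and the numeric suffix scheme is just one possible choice.

```python
import shutil
from pathlib import Path

def move_to_label(src, label_dir):
    """Move src into label_dir, adding a numeric suffix if the name is already taken."""
    src, label_dir = Path(src), Path(label_dir)
    label_dir.mkdir(parents=True, exist_ok=True)
    dest = label_dir / src.name
    i = 1
    while dest.exists():
        # Try name_1.ext, name_2.ext, ... until a free name is found.
        dest = label_dir / f"{src.stem}_{i}{src.suffix}"
        i += 1
    shutil.move(str(src), str(dest))
    return dest
```

In the relabeling loop, this helper could stand in for the direct `shutil.move` call, with `path/cat` passed as `label_dir`.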

I went through this whole procedure a second time, just to be sure, and I probably should do it again. Unfortunately, I am no furniture expert and can't reliably relabel mislabeled images, so I guess my dataset still contains plenty of them.

path = Path('data/train')

fns = get_image_files(path)

len(fns)

Our dataset is smaller now that we removed 60 images!

furniture = DataBlock(

    blocks=(ImageBlock, CategoryBlock),

    get_items=get_image_files,

    splitter=RandomSplitter(valid_pct=0.2, seed=1), # Fixed seed for tuning hyperparameters

    get_y=parent_label,

    item_tfms=RandomResizedCrop(224, min_scale=0.5),

    batch_tfms=aug_transforms())

dls = furniture.dataloaders(path,bs=32)

learn = cnn_learner(dls, resnet50, metrics=error_rate)

learn.fine_tune(8, cbs=[ShowGraphCallback()])

learn.save('resnet50-13_error_rate')

We used transfer learning, with resnet50 as our pretrained model (the resnet50 architecture with weights pretrained on the ImageNet dataset) and a batch size of 32 (I am training on my personal GPU, an RTX 2060, which couldn't handle a larger batch size). As you can see, our error_rate on the validation set is around 13%, which is not great but understandable given how roughly labeled our dataset is.
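To make the metric concrete: the error rate is the share of validation images whose predicted label differs from the true one, i.e. 1 minus accuracy. The sketch below uses made-up predictions and a hypothetical helper name (`simple_error_rate`); it is not fastai's `error_rate`, which works on tensors.

```python
def simple_error_rate(predicted, actual):
    """Fraction of predictions that differ from the true label (1 - accuracy)."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        raise ValueError("need at least one example")
    wrong = sum(p != a for p, a in zip(predicted, actual))
    return wrong / len(actual)

# Made-up example: 13 mistakes out of 100 validation images.
preds  = [0] * 87 + [1] * 13
labels = [0] * 100
print(f"{simple_error_rate(preds, labels):.2f}")  # prints 0.13
```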

learn.export()

path = Path()

path.ls(file_exts = '.pkl')

learn_inf = load_learner(path/'export.pkl')

To make our app, we will use IPython widgets and Voilà.

btn_upload = widgets.FileUpload()

out_pl = widgets.Output()

lbl_pred = widgets.Label()

btn_run = widgets.Button(description='Classify')

Let's define what uploading an image does.

def on_data_change(change):

    lbl_pred.value = ''  # clear the previous prediction

    img = PILImage.create(btn_upload.data[-1])  # most recently uploaded file

    out_pl.clear_output()

    with out_pl: display(img.to_thumb(128,128))

    pred,pred_idx,probs = learn_inf.predict(img)

    lbl_pred.value = f'Prediction: {pred}; Probability: {probs[pred_idx]:.04f}'

btn_upload.observe(on_data_change, names=['data'])
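The label text is built from the three values returned by `predict`: the decoded class, its index, and the probabilities for all classes. Here is a small sketch of the same formatting with plain lists, so it can be tried without a trained model. The vocab and probabilities are made up, and `format_prediction` is a hypothetical helper.

```python
def format_prediction(vocab, probs):
    """Pick the most probable class and format it like the label in the app."""
    pred_idx = max(range(len(probs)), key=probs.__getitem__)
    return f"Prediction: {vocab[pred_idx]}; Probability: {probs[pred_idx]:.04f}"

vocab = ["Louis XIII", "Louis XIV", "Louis XV", "Louis XVI"]  # assumed folder names
probs = [0.05, 0.70, 0.20, 0.05]                              # made-up probabilities
print(format_prediction(vocab, probs))
```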

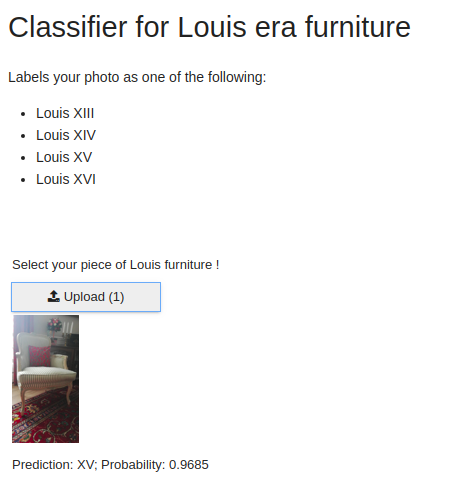

display(VBox([widgets.Label('Select your piece of Louis furniture !'), btn_upload, out_pl, lbl_pred]))

Above is what users see when they launch our app.

When uploading an image, the whole thing looks like this!

Now we just have to deploy our app! Voilà can turn your Jupyter notebook into a simple working web app. Of course, we aren't converting this entire notebook, but this one here instead.

We are deploying our app on Binder. Check out this documentation on how to use Voilà with Binder.
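For reference, a Binder link that opens a notebook through Voilà follows a fixed pattern, with the Voilà render path passed in the `urlpath` query parameter. The sketch below builds such a link. The user, repository, branch and notebook names are placeholders of mine, not the real ones for this project.

```python
from urllib.parse import quote

def binder_voila_url(user, repo, branch, notebook):
    """Build a mybinder.org link that opens `notebook` through Voilà."""
    urlpath = quote(f"voila/render/{notebook}", safe="")  # also encodes the slashes
    return f"https://mybinder.org/v2/gh/{user}/{repo}/{branch}?urlpath={urlpath}"

# Placeholder names, not the real repository for this project.
print(binder_voila_url("some-user", "some-repo", "main", "app.ipynb"))
```

The repository also needs a dependency file that Binder can install from (for example a requirements.txt listing voila and fastai); check the documentation for the exact setup.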

And TADAAAA! That's all it took!

Check out the working web app

Conclusion

What do I take away from this small project?

Things that worked great

- training

- building app

- deploying app

Things that didn't work great / were hard

- getting good data (lots of mislabeled data)

- cleaning it up (lots of out-of-domain data)

- finding the right number of epochs felt a little random

Things to look into:

- Find a way to get cleaner data

- Perhaps use a different slice than default for fine tuning

- Make the app cleaner